If your company handles a significant amount of data, chances are that using AI (Artificial Intelligence) for cybersecurity is an inevitable future. The advantages can far outweigh the risks if implemented with careful, purposeful planning – and a whole lot of failsafes. While significant advances in AI technology have been made, it is by no means to be considered infallible or a replacement for human experts. Educating yourself and your staff will be of the utmost importance to help ensure the proper protocols are in place, and the right people are in the right roles.

In the early 2000s, machine learning algorithms began to gain wider acceptance in the cybersecurity industry, particularly in the areas of intrusion detection and malware detection. Researchers began using machine learning algorithms to analyze vast amounts of data from network traffic, system logs, and other sources, in order to identify patterns of behavior that might indicate an attack. In recent years, the use of AI in cybersecurity has continued to expand, with new techniques and algorithms being developed to address the ever-evolving threat landscape.

Machine Learning Algorithms

A machine learning algorithm is the method by which the AI system conducts its task, generally predicting output values from given input data. There are three primary types of machine learning algorithms to be familiar with:

- Supervised Learning – This is the most commonly used type of machine learning. In supervised learning, a model is trained on labeled data, which means the data is already labeled with the correct output or result. The goal of the model is to learn from this labeled data and predict the correct output when given new, unlabeled data.

- Unsupervised Learning – In unsupervised learning, the data is not labeled, and the model has to find patterns and relationships on its own. The goal of unsupervised learning is to find hidden structures or groupings in the data without being told what they are.

- Reinforcement Learning – In reinforcement learning, the model learns through trial and error. The model interacts with an environment and receives feedback in the form of rewards or punishments based on its actions. The goal of reinforcement learning is to learn the best behavior or action to take in each situation to maximize rewards.

Deep Learning is a subset of machine learning that involves training artificial neural networks to learn from data. These neural networks are inspired by the structure and function of the human brain and are capable of learning complex patterns and relationships in data. Deep learning is especially useful for tasks that involve large amounts of data and complex relationships between variables.

Machine Learning Models

A machine learning model is a file that has been trained to recognize certain types of patterns. You train a model over a set of data, providing it an algorithm that it can use to reason over and learn from those data. There are many different types of machine learning models, each with its own strengths and weaknesses. Some of the more common types with uses in cybersecurity are:

Large Language Model (LLM) – Undoubtedly one of the hottest topics in the world, LLM is a type of machine learning model that has been trained on a massive corpus of text data, typically using deep learning techniques. The goal of an LLM is to learn patterns and relationships in natural language text data, such as sentence structure, grammar, and meaning. The best example of a well-known LLM is OpenAI’s GPT. LLMs have a wide range of potential applications, such as language translation, text summarization, language modeling, and even creative writing. They are also increasingly being used as the basis for conversational agents, chatbots, and other natural language processing applications, as they can generate highly realistic and natural-sounding responses to user input.

Convolutional Neural Networks (CNN’s) – A deep learning model commonly used for image and video recognition tasks. They are inspired by the structure of the visual cortex in animals and are designed to process input data with a grid-like topology, such as images. CNNs have been highly successful in a wide range of applications, including image recognition, object detection, and natural language processing.

Artificial Neural Networks (ANN’s) – A type of machine learning model that is loosely inspired by the structure and function of biological neurons in the brain. ANNs consist of interconnected nodes or neurons that process and send information in a parallel and distributed manner. ANNs have been used in a wide range of applications, including image and speech recognition, natural language processing, and robotics.

Linear Regression – A type of supervised learning model in machine learning that is used to predict a continuous output variable based on one or more input variables. It models the relationship between the input variables and the output variable as a linear equation. Linear regression is a simple yet powerful model that can be used for a wide range of applications, such as sales forecasting, stock price prediction, and weather forecasting. It is a popular first step in machine learning because of its simplicity and interpretability.

Clustering – A type of unsupervised learning in machine learning that involves grouping similar data points together into clusters based on their similarities or differences. The goal of clustering is to discover hidden patterns or structures in the data without any prior knowledge of the labels or categories. Clustering is a powerful technique that has many applications in data mining, image processing, and natural language processing. Some examples include customer segmentation, anomaly detection, and topic modeling.

The Transformer Model – A type of neural network architecture used in natural language processing (NLP) and other sequential data tasks. It was first introduced in the paper “Attention Is All You Need” by Vaswani et al. in 2017 and has since become one of the most popular models for NLP tasks. The Transformer model has been used for a wide range of NLP tasks, including machine translation, text classification, and language modeling.

Generative Adversarial Networks (GAN’s) – A type of neural network architecture that consists of two components: a generator and a discriminator. GANs are used for generating synthetic data, such as images, videos, or music. The goal of the generator is to generate synthetic data that can fool the discriminator into thinking it is real, while the goal of the discriminator is to correctly classify the real and generated data. The goal of the generator is to generate synthetic data that can fool the discriminator into thinking it is real, while the goal of the discriminator is to correctly classify the real and generated data.

Recurrent Neural Networks (RNN’s) – A type of neural network architecture that is designed to handle sequential data, such as time-series data, text data, and speech data. Unlike feedforward neural networks, which process inputs in a fixed order, RNNs process inputs in a sequential order, one at a time, and keep a “memory” of the past inputs. RNNs have been used for a wide range of applications, including language modeling, speech recognition, machine translation, and image captioning. They are a powerful tool for handling sequential data and have shown great success in a wide range of applications.

Challenges/Risks

While AI has the potential to significantly enhance cybersecurity, there are several challenges that must be addressed to ensure that AI systems are effective, reliable, and secure. A few notable issues to prepare for are:

Adversarial attacks: Threat actors can use sophisticated techniques to manipulate AI models, making them behave in unexpected ways. This can allow attackers to bypass security measures or cause the AI system to make incorrect decisions.

- Model Poisoning: In AI this refers to the act of intentionally manipulating the training data of a machine learning model to degrade its performance or make it produce incorrect outputs. The attacker’s goal is to introduce malicious inputs that will trick the model into making incorrect predictions or classifications.

Data quality: AI systems require large amounts of high-quality data to function effectively. In the case of cybersecurity, this can be challenging, as many security incidents are rare or unique, making it difficult to train AI systems on relevant data.

Explainability: Many AI models are complex and difficult to understand, which can make it challenging for security professionals to explain how the system is making decisions. This can be particularly problematic in the context of cybersecurity, where it’s important to understand how the AI system is finding and responding to threats.

Bias: One of the most talked about challenges – AI models can be biased in various ways, which can lead to incorrect or unfair decisions. In cybersecurity, this can be particularly problematic, as biased decisions could result in security incidents being overlooked or incorrect actions being taken.

Scalability: AI systems can be computationally expensive and require large amounts of resources to run. This can make it challenging to deploy AI systems at scale in complex cybersecurity environments.

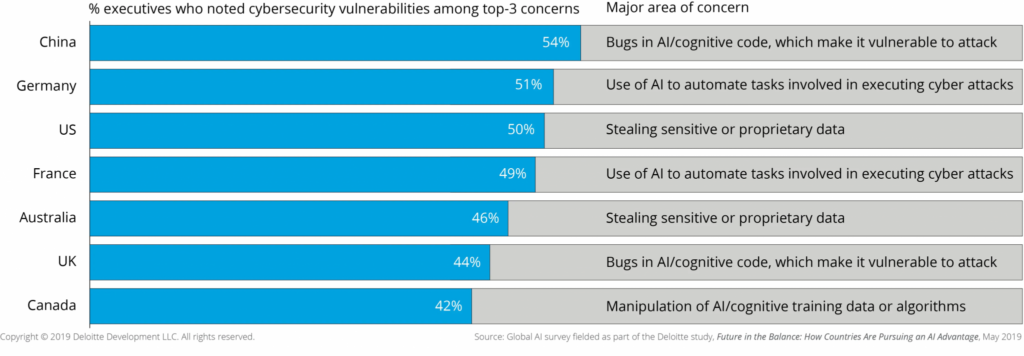

A study by Deloitte illustrated a list of concerns about various types of AI risks:

Mitigation

Mapping out risks for all components of an AI system is an important task that can help identify potential vulnerabilities and reduce the risk of unintended consequences or failures. Here are some steps you can take to map out risks for an AI system:

1. Identify the components of the AI system: Start by identifying all the components of the AI system, including hardware, software, data, and personnel.

2. Assess the risks associated with each component: For each component of the AI system, identify the potential risks and vulnerabilities. This may include technical risks, such as software bugs or hardware failures, as well as non-technical risks, such as data privacy or ethical considerations.

3. Identify potential failure modes: Consider how each component of the AI system could fail or malfunction, and what the consequences of those failures could be. This may involve conducting a failure mode and effects analysis (FMEA) or similar process.

4. Consider the interdependencies between components: Study how the various components of the AI system interact with each other and consider how failures or vulnerabilities in one component could affect the others.

5. Prioritize risks: Once you have identified the potential risks and vulnerabilities, prioritize them based on their likelihood and potential impact. This will help you focus on the most important risks and allocate resources accordingly.

6. Develop mitigation strategies: Develop strategies to mitigate the identified risks, such as implementing redundancy, improving security controls, or developing contingency plans.

7. Test and validate the system: Test the AI system under various conditions to show any additional risks or vulnerabilities. This may involve conducting penetration testing or other forms of security testing. For example, it is important for developers to implement robust data validation and quality control measures to prevent model poisoning attacks.

GIGO (“Garbage In, Garbage Out.”) is an important concept in computer science and refers to the fact that the quality of the output produced by a computer program is directly related to the quality of the input provided to it. In other words, if you provide a computer program with poor quality or inaccurate data, the output it produces will also be of poor quality or inaccurate. This is because the program can only work with the information it is given, and if that information is flawed or incomplete, the results it produces will be flawed or incomplete as well.

It is important to ensure that the data used in AI and machine learning is of high quality and properly curated to avoid the GIGO problem. This includes ensuring that the data is representative of the problem being solved, free from bias, and properly labeled and annotated to ensure that the algorithms can learn from it effectively.

Best Practices

As with any other procedure or framework, there are best practices that should be considered when using AI for cybersecurity:

1. Establish clear goals: Identify the specific cybersecurity goals you want to achieve with AI, such as improving threat detection, enhancing incident response, or automating routine tasks.

2. Ensure data quality: Ensure that the data you are using to train AI models is high quality, relevant, and free from bias. Use data quality checks, data cleansing, and data normalization techniques to improve the accuracy and effectiveness of AI models.

3. Use a layered approach: Use a layered approach to cybersecurity that combines AI with other security technologies, such as firewalls, intrusion detection systems, and security analytics. This can help improve the overall effectiveness of your security posture.

4. Monitor and update models: Monitor the performance of AI models on an ongoing basis and update them as necessary to ensure they remain effective and relevant.

5. Implement explainability and transparency: Ensure that AI models used for cybersecurity are transparent and explainable. This means that you should be able to understand how the models work and why they are making certain decisions or recommendations.

6. Ensure compliance with regulations and standards: Ensure that the use of AI in cybersecurity is compliant with relevant regulations and standards, such as GDPR, HIPAA, and NIST Cybersecurity Framework.

7. Train employees: Train employees on how to work with AI and how it can be used to enhance cybersecurity. This includes training on how to use AI-generated insights and recommendations, as well as how to identify and report potential AI-related issues.

8. Conduct regular risk assessments: Conduct regular risk assessments to identify potential AI-related risks and vulnerabilities. Use the results of these assessments to inform the development of risk management strategies and policies.

In Part 2 of our AI series, we’ll be taking a deep dive in to the first half of our most popular uses for AI in Cybersecurity, including:

- Intrusion detection and prevention systems (IDPS)

- Threat intelligence and analysis

- Cybersecurity analytics

- Network Traffic analytics

- Malware detection and analysis